No Fluff, Just the Good Stuff: Key Insights from the ALDI (Unifying and Improving Domain Adaptive Object Detection) Paper, with Code

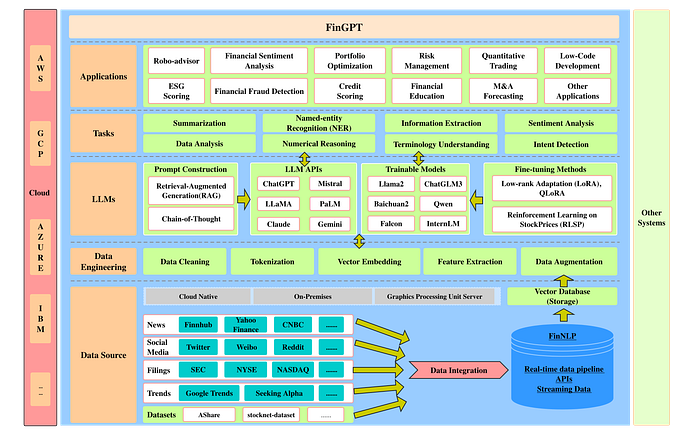

Domain: This paper focuses on Domain Adaptive Object Detection (DAOD), which addresses the fact that object detectors perform poorly on data that differ from their training set. ALDI tackles this problem with two-stage detectors based on Faster R-CNN; the ALDI developers note that it can be extended to support other types of detectors.

The paper:

- Introduces a unified framework and benchmark for Feature Alignment and Knowledge Distillation.

- Proposes a training and evaluation protocol for DAOD.

- Releases CFC-DAOD, a new dataset for DAOD benchmarking.

- Enhances DAOD methods with a new approach called ALDI++.

The ALDI framework is built using Detectron2.

Key Terminology

Source-only Model (Baseline):

Trained only on the source dataset.

Oracle Model:

Trained on the target dataset with full supervision.

DAOD Goal:

Close the performance gap between the Baseline model and the Oracle model without any supervised training on the target domain.

DAOD:

There are many approaches to closing this gap in DAOD. The two best known are Feature Alignment and Self-Distillation.

Note: ALDI combines both in a single framework.

- Feature Alignment — Reduces the distribution gap between the source and target domain.

- Self-Distillation — Uses a Teacher-Student model for knowledge transfer.

Feature Alignment

- The goal is to minimize the distribution difference between the source domain (labeled dataset) and the target domain (unlabeled dataset).

- It can be done at either the image level or the feature level.

Self-Distillation (Mean Teacher Approach)

Also known as self-training: a Teacher model generates predictions that the Student model learns from.

How It Works:

- A Teacher model predicts on the target dataset.

- The predictions act as labels for the Student model.

- The Teacher model is updated using an Exponential Moving Average (EMA) of the Student model’s parameters.

- This approach is called Mean Teacher in DAOD.
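The Teacher update described above can be sketched in a few lines. This is a minimal, framework-agnostic sketch that assumes parameters are stored as a plain dict of name → list of floats (in a real detector they would be PyTorch tensors); the function names and the decay value are illustrative assumptions, not ALDI's code or defaults.

```python
import copy


def init_teacher(student_params: dict) -> dict:
    """The Teacher starts as an exact, independent copy of the Student's weights."""
    return copy.deepcopy(student_params)


def ema_update(teacher: dict, student: dict, decay: float = 0.999) -> dict:
    """In-place EMA: teacher <- decay * teacher + (1 - decay) * student.

    Only the Student is trained by backpropagation; the Teacher is never
    touched by gradients, only by this moving average.
    """
    for name, t_values in teacher.items():
        s_values = student[name]
        for i, (t, s) in enumerate(zip(t_values, s_values)):
            t_values[i] = decay * t + (1.0 - decay) * s
    return teacher


# Usage: one (pretend) Student optimizer step, then one Teacher EMA step.
student = {"w": [1.0, 2.0]}
teacher = init_teacher(student)
student["w"] = [2.0, 4.0]  # pretend backpropagation changed the Student
ema_update(teacher, student, decay=0.9)
# teacher["w"] is now approximately [1.1, 2.2]
```

A decay close to 1 makes the Teacher change slowly, so its predictions on target images are a smoothed, more stable version of the Student's.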

Other well-known Mean Teacher methods include:

- MTOR (Mean Teacher with Object Relations)

- PT (Probabilistic Teacher)

- CMT (Contrastive Mean Teacher)

Note: A closely related technique, knowledge distillation, is also used to optimize models for production: a smaller Student model is trained to keep its accuracy close to that of a larger Teacher model.

ALDI Paper Breakdown:

Dataset and Training

- The dataset consists of labeled source data and unlabeled target data.

- Each training batch is a mix of source and target data:

B = B_source + B_target
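A mixed batch can be sketched as follows. This assumes an equal split between source and target images and a simple dict layout for each item; both the split and the field names are hypothetical, chosen only to illustrate that source items carry labels while target items do not.

```python
import random


def make_mixed_batch(source, target, n_source, n_target, rng):
    """Build one training batch B = B_source + B_target.

    Source items carry ground-truth annotations; target items are unlabeled.
    Each item is tagged with its domain so the training step can route it
    to the right loss (supervised vs. distillation/alignment).
    """
    b_source = [{**item, "domain": "source"} for item in rng.sample(source, n_source)]
    b_target = [{**item, "domain": "target"} for item in rng.sample(target, n_target)]
    return b_source + b_target


# Usage with toy, hypothetical data.
source = [
    {"file_name": f"src_{i}.jpg", "annotations": [{"bbox": [0, 0, 10, 10], "category_id": 1}]}
    for i in range(10)
]
target = [{"file_name": f"tgt_{i}.jpg"} for i in range(10)]
batch = make_mixed_batch(source, target, n_source=4, n_target=4, rng=random.Random(0))
```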

Teacher-Student Model Training:

- Both models start with the same weights, either from ImageNet pretraining (Detectron2 Model Zoo) or from supervised pretraining on the source dataset (called Burn-in).

- The Teacher model’s weights are updated via EMA, while the Student model is trained via backpropagation.

Training Objectives (or Approaches)

The ALDI framework is modular: each training objective can be enabled or disabled independently. There are three main training objectives (I prefer to call them approaches):

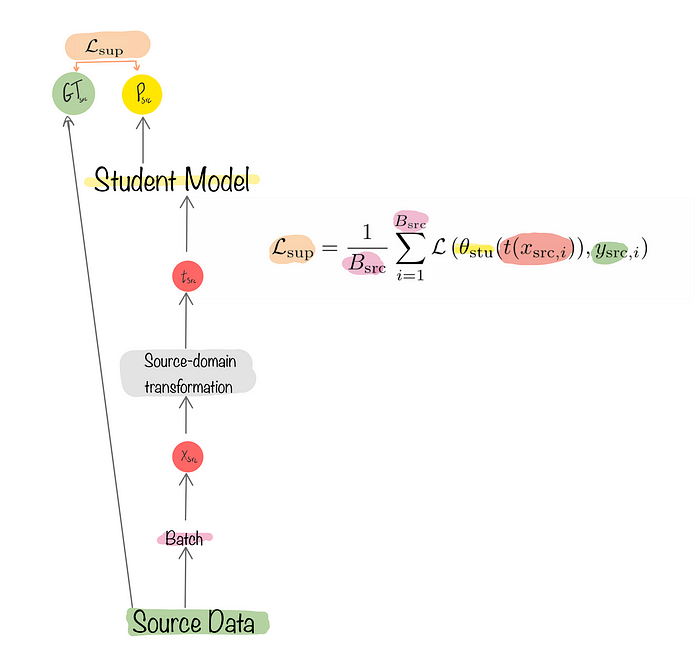
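Conceptually, the modular design amounts to summing whichever objectives are switched on: L = Lsup + λdistill·Ldistill + λalign·Lalign. The sketch below assumes per-objective on/off switches and weights; the dictionary keys and weight values are hypothetical, not ALDI's configuration keys.

```python
def total_loss(losses, enabled, weights):
    """Sum only the enabled objectives, each scaled by its weight (default 1.0).

    losses:  objective name -> scalar loss value for this batch
    enabled: objective name -> on/off switch
    weights: objective name -> loss weight
    """
    return sum(
        weights.get(name, 1.0) * value
        for name, value in losses.items()
        if enabled.get(name, False)
    )


losses = {"sup": 1.0, "distill": 0.5, "align": 0.2}
weights = {"sup": 1.0, "distill": 1.0, "align": 0.1}  # illustrative values only
full = total_loss(losses, {"sup": True, "distill": True, "align": True}, weights)
source_only = total_loss(losses, {"sup": True}, weights)  # Baseline: Lsup only
```

Turning every switch off except "sup" recovers the source-only Baseline, which is what makes apples-to-apples comparisons between methods possible.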

1. Supervised Training with Source Data

- Uses labeled source data.

- Applies source-domain transformations, passes the transformed data to the Student model, and computes the supervised loss (Lsup) from the Student model’s predictions and the ground truth from the source data.

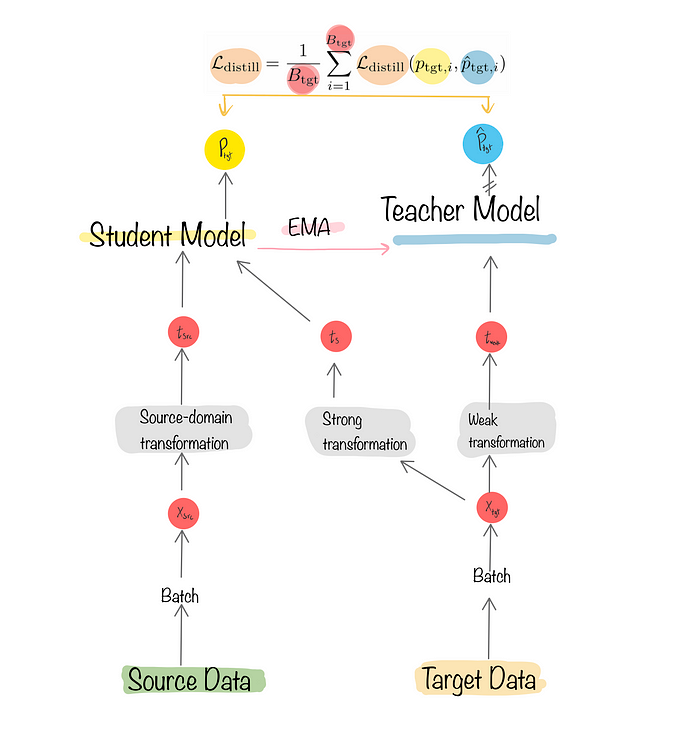

2. Self-Distillation with Target Data

- Uses unlabeled target data and creates two copies of each image:

- One copy gets a weak transformation → passed to the Teacher model.

- The other copy gets a strong transformation → passed to the Student model.

- The Teacher’s predictions act as distillation targets for the Student predictions.

- Computes the distillation loss (Ldistill).

- The Teacher’s predictions can serve as soft targets (e.g., logits or softmax probabilities) or as hard targets (thresholded pseudo-labels).
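The difference between soft and hard targets can be sketched in plain Python. The detection dict layout and the 0.8 score threshold are hypothetical choices for illustration, not ALDI's defaults.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one box's class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def soft_targets(teacher_logits):
    """Soft targets: keep the Teacher's full class distribution for every box."""
    return [softmax(row) for row in teacher_logits]


def hard_targets(teacher_detections, score_threshold=0.8):
    """Hard targets: keep only confident Teacher detections as pseudo-labels.

    Scores are discarded; the Student is trained on the surviving boxes
    as if they were ground truth.
    """
    return [
        {"bbox": d["bbox"], "category_id": d["category_id"]}
        for d in teacher_detections
        if d["score"] >= score_threshold
    ]


detections = [
    {"bbox": [10, 10, 50, 40], "category_id": 1, "score": 0.95},
    {"bbox": [60, 20, 30, 30], "category_id": 2, "score": 0.40},
]
pseudo_labels = hard_targets(detections)  # only the 0.95 detection survives
probs = soft_targets([[2.0, 0.5, -1.0]])  # one probability distribution per box
```

Hard targets throw away the Teacher's uncertainty but are simple to plug into a standard detection loss; soft targets keep that information at the cost of needing a distribution-matching loss.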

3. Feature Alignment

- Aligns source and target samples using an alignment loss, Lalign (the purple area in the figure above), which enforces invariance across domains at either the image or the feature level.

- The ALDI team uses two alignment methods: Domain-Adversarial Training (DANN) and Image-to-Image Alignment.

3.1- Domain-Adversarial Training (DANN)

- Trains a domain classifier to differentiate between source and target features.

- Simultaneously, the feature extractor learns to confuse the domain classifier, enforcing invariance across domains.
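For reference, the standard domain-adversarial objective can be written as below, with a feature extractor F, a domain classifier D that outputs the probability that a feature came from the source domain, and source and target distributions S and T. This is the generic DANN formulation, not necessarily ALDI's exact loss.

```latex
\mathcal{L}_{\text{align}}(F, D) = -\,\mathbb{E}_{x \sim \mathcal{S}}\left[\log D\big(F(x)\big)\right] - \mathbb{E}_{x \sim \mathcal{T}}\left[\log\Big(1 - D\big(F(x)\big)\Big)\right]

\min_{D} \, \mathcal{L}_{\text{align}}(F, D) \qquad \text{and} \qquad \max_{F} \, \mathcal{L}_{\text{align}}(F, D)
```

The minimization over D trains the classifier to tell the domains apart; the maximization over F is what "confusing the domain classifier" means. In practice the maximization is usually implemented with a gradient reversal layer, which is the identity in the forward pass and multiplies the gradient by −λ in the backward pass, so a single ordinary optimizer step pushes D and F in opposite directions. F is trained on the detection losses at the same time.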

3.2- Image-to-Image Alignment

- Works in pixel space by transforming source images so they look like target images, or vice versa. Think of it as photoshopping (translating) an image from one domain into the style of the other.

- The transformed images are then used for training.
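To make "pixel space" concrete, the sketch below uses a deliberately simple stand-in: per-channel color statistics matching, which shifts and scales each RGB channel of a source image so its mean and standard deviation match a target image. Learned image-to-image translation models are far more expressive; this swapped-in technique is only an illustration and not what ALDI uses.

```python
import statistics


def channel_stats(pixels):
    """Per-channel (mean, std) for a list of (R, G, B) pixels."""
    return [(statistics.fmean(c), statistics.pstdev(c)) for c in zip(*pixels)]


def match_statistics(source_pixels, target_pixels, eps=1e-6):
    """Re-color source pixels so each channel's mean/std match the target's."""
    src_stats = channel_stats(source_pixels)
    tgt_stats = channel_stats(target_pixels)
    translated = []
    for pixel in source_pixels:
        new_pixel = []
        for value, (s_mean, s_std), (t_mean, t_std) in zip(pixel, src_stats, tgt_stats):
            v = (value - s_mean) / (s_std + eps) * t_std + t_mean
            new_pixel.append(min(255.0, max(0.0, v)))  # clamp to the 8-bit range
        translated.append(tuple(new_pixel))
    return translated


# A dark gray "source" image re-colored toward a brighter, reddish "target" image.
source_img = [(0, 0, 0), (100, 100, 100)]
target_img = [(100, 50, 0), (200, 150, 100)]
translated = match_statistics(source_img, target_img)
```

Because only colors change, the source bounding boxes stay valid, so the translated images can be used with the original source labels.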

Codebase

The framework uses the COCO annotation format. You can either extend the dataset loader to support other formats or convert your dataset to COCO format.
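If you convert your own data, a minimal COCO-style detection file looks roughly like the one built below. The helper function, file names, and the "fish" category are hypothetical; the "images", "annotations", and "categories" sections and the [x, y, width, height] box convention follow the COCO format.

```python
import json


def make_coco(images, boxes_per_image, categories):
    """Build a minimal COCO-style object-detection annotation dict.

    images:          list of (file_name, width, height)
    boxes_per_image: list parallel to `images`; each entry is a list of
                     (category_id, [x, y, width, height]) boxes in pixels
    categories:      list of (category_id, name)
    """
    coco = {"images": [], "annotations": [], "categories": []}
    ann_id = 1
    for img_id, ((file_name, width, height), boxes) in enumerate(
        zip(images, boxes_per_image), start=1
    ):
        coco["images"].append(
            {"id": img_id, "file_name": file_name, "width": width, "height": height}
        )
        for category_id, (x, y, w, h) in boxes:
            coco["annotations"].append({
                "id": ann_id,
                "image_id": img_id,
                "category_id": category_id,
                "bbox": [x, y, w, h],  # COCO boxes are [x_min, y_min, width, height]
                "area": w * h,
                "iscrowd": 0,
            })
            ann_id += 1
    coco["categories"] = [{"id": cid, "name": name} for cid, name in categories]
    return coco


coco = make_coco(
    images=[("img_0001.jpg", 640, 480), ("img_0002.jpg", 640, 480)],
    boxes_per_image=[[(1, [10, 20, 100, 50])], []],  # second image has no boxes
    categories=[(1, "fish")],
)
json_text = json.dumps(coco, indent=2)  # write this to a .json file on disk
```

In Detectron2 projects, such a file is commonly registered with `register_coco_instances(name, metadata, json_file, image_root)` from `detectron2.data.datasets`; check ALDI's datasets.py for how it registers its own datasets.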

The project’s GitHub repository contains four folders plus a few top-level files:

.

├── README.md # Readme file

├── aldi/ # ALDI framework folder

├── config/ # YAML configuration files for building models

├── docs/ # Documentation

├── tools/ # Scripts to use ALDI framework, such as training script

├── .gitignore # Files and directories to be ignored by Git

└── pyproject.toml # configuration file

You don’t need to install and build Detectron2 yourself; ALDI installs it as part of its dependencies.

Tools folder:

It contains many scripts, but the most important one is train_net.py, which you use either to start a new training run or to run inference/evaluation. It is based on the Detectron2 training script: you pass it the YAML config file for the model you want and the run mode (train or eval). The script then sets up the configuration from Detectron2’s base configuration settings plus your YAML file, builds the model, and runs it.
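The "base configuration plus your YAML file" step can be pictured as layering one nested dictionary over another. In Detectron2 itself this is done with a CfgNode, via `get_cfg()` followed by `cfg.merge_from_file(path)` from `detectron2.config`, and the standard `default_argument_parser()` from `detectron2.engine` exposes `--config-file` and `--eval-only` flags; assuming ALDI's script follows that usual pattern, the run mode is chosen the same way. The sketch below only mimics the merge idea with plain dicts and illustrative values.

```python
import copy


def merge_config(base, overrides):
    """Recursively overlay YAML-style overrides on a copy of the base defaults."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)  # descend into sub-sections
        else:
            merged[key] = value  # a leaf value from the YAML file wins
    return merged


# Illustrative values only, not Detectron2's or ALDI's actual defaults.
base_defaults = {
    "MODEL": {"DEVICE": "cuda", "WEIGHTS": ""},
    "SOLVER": {"BASE_LR": 0.02, "IMS_PER_BATCH": 16},
}
yaml_overrides = {"SOLVER": {"BASE_LR": 0.01}}
cfg = merge_config(base_defaults, yaml_overrides)
```

One difference worth knowing: Detectron2's CfgNode is stricter than this sketch and normally rejects YAML keys that are not already defined in the defaults, which catches typos in config files early.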

Aldi folder:

.

├── __init__.py

├── align.py

├── aug.py

├── backbone.py

├── checkpoint.py

├── config.py

├── dataloader.py

├── datasets.py

├── distill.py

├── dropin.py

├── ema.py

├── helpers.py

├── model.py

├── pseudolabeler.py

└── trainer.py

The most important files are:

aug.py:

Uses Detectron2’s augmentation functions to apply the weak and strong augmentations used in the ALDI framework.
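The weak/strong split can be illustrated without Detectron2 on a tiny grayscale image stored as nested lists. This is not ALDI's aug.py: the choice of a horizontal flip as the weak augmentation, brightness shift plus random erasing as the strong augmentation, and all parameter values are assumptions made for the sketch.

```python
import random


def weak_augment(image, rng):
    """Weak view: random horizontal flip (the only geometric change)."""
    if rng.random() < 0.5:
        return [row[::-1] for row in image]
    return [row[:] for row in image]


def strong_augment(weak_image, rng, max_brightness=40, erase_size=2):
    """Strong view built on top of the weak view, using photometric changes only.

    Because no geometry changes here, boxes the Teacher predicts on the weak
    view still line up with objects in the Student's strong view.
    """
    shift = rng.randint(-max_brightness, max_brightness)
    out = [[min(255, max(0, px + shift)) for px in row] for row in weak_image]
    # Random erasing (cutout): blank out one square patch.
    h, w = len(out), len(out[0])
    top = rng.randint(0, h - erase_size)
    left = rng.randint(0, w - erase_size)
    for y in range(top, top + erase_size):
        for x in range(left, left + erase_size):
            out[y][x] = 0
    return out


rng = random.Random(0)
image = [[10 * (y * 4 + x) for x in range(4)] for y in range(4)]
weak = weak_augment(image, rng)      # goes to the Teacher
strong = strong_augment(weak, rng)   # goes to the Student
```

Deriving the strong view from the weak one is a design choice that keeps pseudo-label boxes valid without having to transform them.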

backbone.py:

Defines custom functions and modifications for a Vision Transformer (ViT) backbone. By default, ALDI supports Detectron2’s ResNet-50 with FPN; this file adds support for the ViTDet-B backbone.

model.py:

Extends Detectron2’s default model-building functionality with AlignMixin (feature alignment) and DistillMixin (self-distillation), builds the model from the configuration passed in by train_net.py, and moves it to the GPU or CPU.
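The mixin idea can be illustrated with plain Python classes. Everything below is hypothetical (class bodies, the `losses` method, placeholder loss values); ALDI's real mixins wrap Detectron2 modules and have different interfaces. The sketch only shows how cooperative `super()` calls let each mixin add its loss on top of a base detector, and how enabling or disabling a mixin changes the composed model.

```python
class BaseDetector:
    """Stand-in for a Detectron2 detector: returns its supervised loss."""

    def losses(self, batch):
        return {"loss_sup": 1.0}  # placeholder value


class AlignMixin:
    """Adds a placeholder feature-alignment loss to whatever comes next in the MRO."""

    def losses(self, batch):
        out = super().losses(batch)
        out["loss_align"] = 0.1  # a domain-classifier loss in a real model
        return out


class DistillMixin:
    """Adds a placeholder self-distillation loss."""

    def losses(self, batch):
        out = super().losses(batch)
        out["loss_distill"] = 0.5  # a Teacher-vs-Student loss in a real model
        return out


def build_model(use_align, use_distill):
    """Compose a model class from the enabled mixins, then instantiate it."""
    bases = []
    if use_align:
        bases.append(AlignMixin)
    if use_distill:
        bases.append(DistillMixin)
    bases.append(BaseDetector)  # the base detector always comes last in the MRO
    return type("ComposedDetector", tuple(bases), {})()


full_model = build_model(use_align=True, use_distill=True)
baseline = build_model(use_align=False, use_distill=False)
```

Because each mixin only calls `super()` and adds its own entry, the mixins stay independent of each other and of the base detector, which is what lets a modular framework switch objectives on and off from configuration.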

Final Thoughts

ALDI provides a unified framework that integrates Feature Alignment and Self-Distillation. The modular design allows different DAOD techniques to be easily implemented and benchmarked.

🔗 Links:

- Framework: https://github.com/justinkay/aldi/tree/main

- Paper: arxiv.org/pdf/2403.12029